Supporting AML with machine learning

AI is a broad term covering multiple fields. For AML professionals, perhaps the most relevant subfield of AI is machine learning, which refers to the use of algorithms that continually improve at a task without the need for human intervention. Machine learning algorithms search for patterns within a given data set, and repeated recognition of those patterns allows an algorithm to make increasingly swift and accurate predictions.

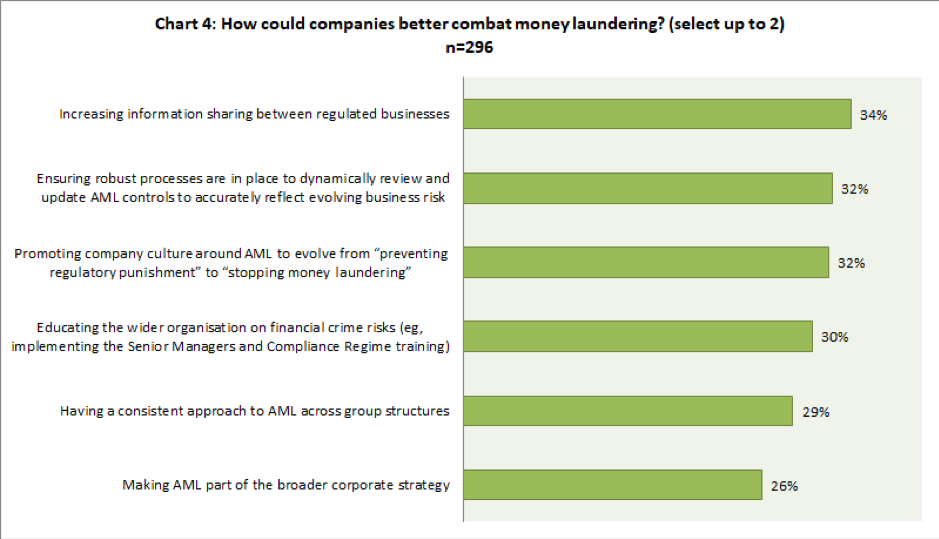

According to a survey of 296 UK-based AML professionals conducted by The Economist Intelligence Unit, the areas where respondents believe AI and advanced analytics can best be applied to combat money laundering are suspicious activity reporting (45%) and transaction monitoring (43%). Those respondents already deploying AI solutions for AML remain convinced that these two areas are where AI can add the greatest benefit. They also see good use for AI in relation to ongoing monitoring and management information.

“The rate of globalisation and digitalisation over the past 10-15 years has led to an exponential growth in the volume and velocity of transactions, and this has had a significant impact on banks and their ability to manage financial crime risk,” says Alex Meehan, head of financial crime intelligence and analytics at Nordea, the largest financial services group in Northern Europe. “Traditional rule-based transaction monitoring systems [a set of ‘if x then y’ rules] are limited in their ability to monitor complex behaviours. Banks are therefore looking for additional analytics-driven capabilities in detecting money laundering activity.”

Legacy rule-based systems, which financial institutions use to monitor payments, generate a very large number of alerts, each of which must be reviewed individually. Yet, on average, over 90% of alerts reportedly turn out to be false positives[1].
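To make the “if x then y” idea concrete, the following is a minimal sketch in Python of a single threshold rule and of how the share of false positives among its alerts could be computed. The threshold, the field names and the sample transactions are all invented for illustration; they are not taken from any institution's monitoring system.

```python
from dataclasses import dataclass

# Hypothetical threshold; real monitoring rules are institution-specific.
AMOUNT_THRESHOLD = 10_000


@dataclass
class Transaction:
    tx_id: str
    amount: float
    is_suspicious: bool  # illustrative outcome of a manual review


def rule_alerts(transactions):
    """Apply the rule 'if amount >= threshold then raise an alert'."""
    return [tx for tx in transactions if tx.amount >= AMOUNT_THRESHOLD]


def false_positive_share(alerts):
    """Share of alerts that reviewers found not to be suspicious."""
    if not alerts:
        return 0.0
    false_positives = sum(1 for tx in alerts if not tx.is_suspicious)
    return false_positives / len(alerts)


# Invented sample data.
SAMPLE = [
    Transaction("t1", 12_500, False),
    Transaction("t2", 9_900, True),   # just under the threshold, so the rule misses it
    Transaction("t3", 50_000, True),
    Transaction("t4", 15_000, False),
    Transaction("t5", 300, False),
]

alerts = rule_alerts(SAMPLE)
print([tx.tx_id for tx in alerts])
print(round(false_positive_share(alerts), 2))
```

In this toy data set two of the three alerts are false positives, and one suspicious payment structured just below the threshold raises no alert at all, which illustrates both weaknesses of a fixed rule.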

Nordea is currently piloting a system where alerts are run through a machine learning model, to help prioritise them for further investigation. “Our whole challenge was to make a decision on an alert that would have a higher confidence than that of a manual operator investigating that alert,” says Mr Meehan. “Although still in pilot, the results have been very positive. The next step for us is to use AI to identify unknown patterns of risk, outside of what has been identified in the rule-based monitoring system, and then run a form of champion challenger against the traditional rule-based system to build confidence in the new techniques.”
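The prioritisation idea can be sketched in a few lines of Python. This is not Nordea's model: the feature names, weights and sample alerts below are hypothetical, and the hand-set logistic score merely stands in for a model that would in practice be trained on previously reviewed alerts. The comparison at the end is one simple reading of a champion-challenger test, in which the existing ordering (champion) and the model's ordering (challenger) are judged on the same reviewed alerts.

```python
import math

# Hypothetical weights standing in for a trained model.
WEIGHTS = {"amount_zscore": 1.2, "new_counterparty": 0.8, "high_risk_country": 1.5}
BIAS = -2.0


def risk_score(features):
    """Logistic score between 0 and 1; higher means review sooner."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def prioritise(alerts):
    """Order alerts so the highest-scoring ones are investigated first."""
    return sorted(alerts, key=lambda a: risk_score(a["features"]), reverse=True)


def precision_at_k(ranked_alerts, k):
    """Fraction of the first k alerts that reviewers confirmed as suspicious."""
    top = ranked_alerts[:k]
    if not top:
        return 0.0
    return sum(a["confirmed"] for a in top) / len(top)


# Invented alerts with illustrative review outcomes.
ALERTS = [
    {"id": "a1", "confirmed": False,
     "features": {"amount_zscore": 0.2, "new_counterparty": 0, "high_risk_country": 0}},
    {"id": "a2", "confirmed": True,
     "features": {"amount_zscore": 2.5, "new_counterparty": 1, "high_risk_country": 1}},
    {"id": "a3", "confirmed": False,
     "features": {"amount_zscore": 1.0, "new_counterparty": 1, "high_risk_country": 0}},
    {"id": "a4", "confirmed": True,
     "features": {"amount_zscore": 1.8, "new_counterparty": 0, "high_risk_country": 1}},
]

champion = ALERTS                # rule-based system: alerts in arrival order
challenger = prioritise(ALERTS)  # model-ranked order

print([a["id"] for a in challenger])
print(precision_at_k(champion, 2), precision_at_k(challenger, 2))
```

With this invented data the model-ranked queue puts both confirmed cases in the first two positions, while the arrival-order queue surfaces only one of them. Real evaluations would use far larger samples and more than one metric.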

From ad hoc to fully integrated

Like other regulated sectors, gambling firms also rely on rule-based processes, which typically flag values above a certain threshold. According to Artur d’Avila Garcez, professor of computer science at City, University of London, whose work has involved analysing AML processes in the gambling industry, the rules need to adapt to new technologies. “These rules should be changing because the malicious user is adapting [its strategy] a lot more quickly than such rule-based systems. So that's where the AI can come in, to improve that prediction by using machine learning,” he explains.

Mr Garcez notes that, initially, AI technology can sit on top of legacy systems, with the different components exchanging information. In the future, however, an integrated system would be more effective. “There is a lot of interest in end-to-end learning,” he says, referring to machine learning models that cover all the steps of a process, rather than just part of it. For the moment, interest is mainly confined to the research world, with financial institutions still under the constraints of running and maintaining their legacy systems, but things are evolving slowly with the development of “systems combining [first generation] symbolic AI and [more advanced] deep neural networks”.

Other potential uses of AI for AML include natural language processing (NLP), which allows computers to draw insights from unstructured data, such as emails, documents, images and video.

Mr Meehan identifies the onboarding of large, complex corporate clients and trade finance as two document-intensive areas where NLP could be particularly helpful. “A corporate entity can have a significant amount of onboarding procedures and have several documents to support a know-your-customer (KYC) review of that relationship,” he says. “These involve large volumes of unstructured data, sitting across multiple Word documents and PDF files. NLP could greatly speed up the review process of such documents by rapidly extracting context and relevance from such material. We haven't begun doing that yet [at Nordea] but you can definitely see opportunity there.”

Rolling out AI

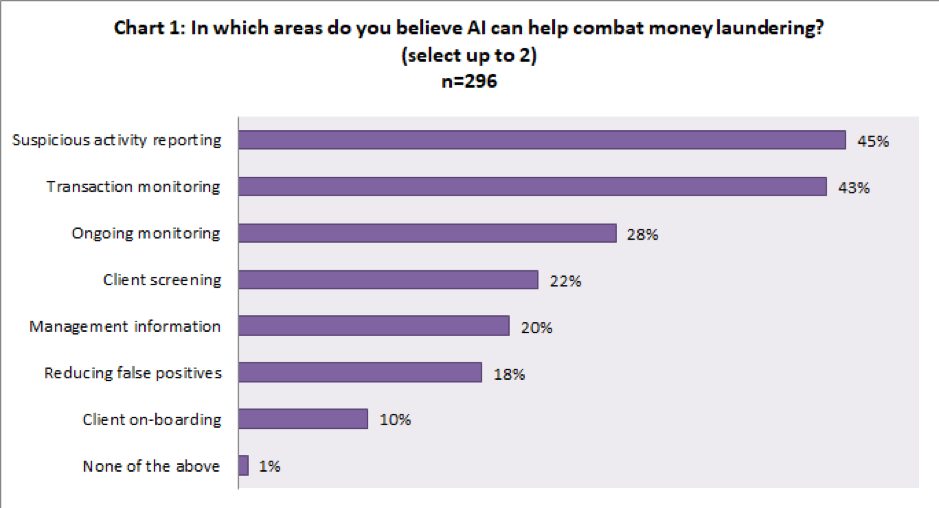

Regulated businesses are on their way to adopting AI technologies to combat money laundering. More than a third (37%) of survey respondents say they are already using AI or other advanced analytics, while more than two-fifths (41%) expect to do so in the next 1-2 years. Only a small minority (3%) have no plans to introduce AI.

Respondents from banks (40%), particularly the larger banks (43% for banks with annual revenue above £1bn), the legal sector (44%), gaming firms (39%) and the larger fintechs (37% for fintechs with annual revenue above £500m) are the most likely to have already deployed AI technology for AML.

Fifteen percent of businesses are planning to deploy AI in the medium term (next 3-5 years). Among real estate agencies, the share not expecting to roll out AI for at least three years is about twice as high (31%).

Santander UK is trialling several AI tools to help combat money laundering, a task Raj Shah, the bank’s head of financial crime model definition and analytics, compares to “finding needles in haystacks”. The trial involves pilots of enhanced transaction monitoring models to refine the area and population in investigations of potential money laundering cases. By improving data accuracy, AI helps to increase successful outcomes from detection engines.

“AI can be used within the monitoring system itself. The models developed capture unusual behaviours that may not be spotted through traditional rules-based systems. This includes supervised and unsupervised machine learning, where the former requires prior knowledge of suspicious activity and the latter does not,” he explains.
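The distinction Mr Shah draws can be illustrated with a deliberately simple Python sketch. Neither function reflects Santander's models: the z-score cut-off, the single-feature threshold search and the sample amounts are assumptions chosen only to show that the unsupervised approach works from the data alone, while the supervised one needs examples already labelled as suspicious or not.

```python
from statistics import mean, pstdev


def unsupervised_outliers(amounts, z_cutoff=2.0):
    """Flag amounts far from the mean. No labels are required."""
    mu = mean(amounts)
    sigma = pstdev(amounts)
    if sigma == 0:
        return []
    return [x for x in amounts if abs(x - mu) / sigma > z_cutoff]


def supervised_threshold(amounts, labels):
    """Learn the cut-off that classifies the most labelled examples correctly.

    Requires prior knowledge: labels[i] is True if amounts[i] was suspicious.
    """
    best_cutoff, best_correct = None, -1
    for cutoff in sorted(set(amounts)):
        correct = sum((x >= cutoff) == y for x, y in zip(amounts, labels))
        if correct > best_correct:
            best_cutoff, best_correct = cutoff, correct
    return best_cutoff


# Invented payment amounts; only the last one was confirmed as suspicious.
AMOUNTS = [100, 120, 95, 110, 105, 130, 90, 5000]
LABELS = [False, False, False, False, False, False, False, True]

print(unsupervised_outliers(AMOUNTS))
print(supervised_threshold(AMOUNTS, LABELS))
```

Both approaches single out the same payment here, but only the supervised one depended on a reviewer having labelled past activity first.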

Barriers and black boxes

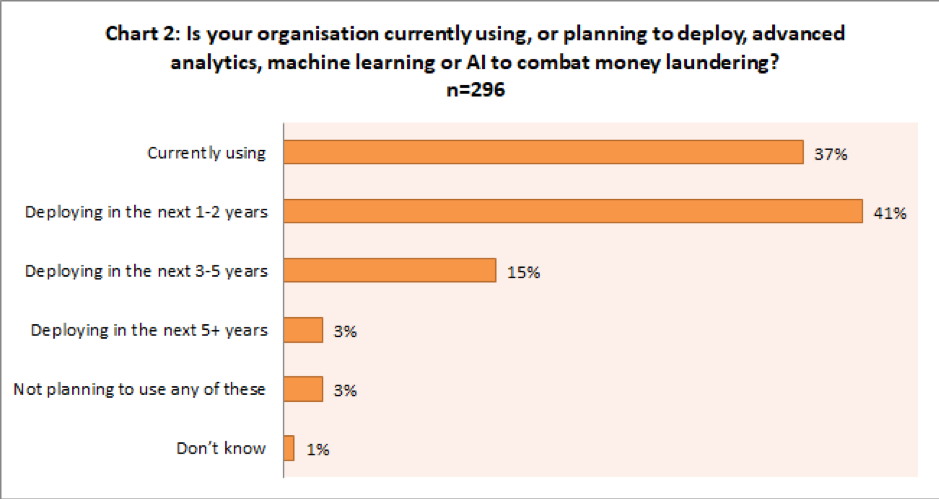

When asked to name the main barriers to the adoption of AI and other advanced analytics, respondents from different regulated sectors gave notably different answers. A lack of understanding of AI by senior management is the main issue across all regulated sectors (34%). For banks, however, the chief concern is that the Financial Conduct Authority (FCA) will find AI techniques unacceptable and may reject them because they lack transparency, with their inner workings not clearly understood (selected by 39%). The same concern about the regulator’s acceptance is also felt to be a major issue in the legal (34%) and real estate (37%) sectors.

Fintechs are less worried about the regulator’s acceptance of AI. For them, the bigger issue is the lack of qualified compliance staff able to interpret the outcomes (33%), a concern shared by other regulated sectors, particularly the banking (36%) and legal (34%) sectors.

Nevertheless, the FCA recognises the potential of AI and has made numerous positive statements about the use of AI in tackling financial crime. In her October 2019 speech, Megan Butler, the FCA’s executive director of supervision for investment, wholesale and specialists, said: “It is becoming increasingly apparent that disrupting financial crime in real time is a key frontier. This is certainly true of fraud – stopping it before it happens. AI and machine learning could really make the difference here.”

The regulator runs a “sandbox” programme, where firms can test new products, including AI tools, under supervised conditions on real customers. Five cohorts, including fintech start-ups and established banks, have participated in the programme since 2016, with a sixth cohort starting in 2020.

The regulator has also warned that AI technology is still in an early stage of development and so companies must be cautious about how and where they apply it. In particular, the FCA has highlighted potential issues related to explainability—the challenge of identifying why an algorithm made the decision it did.

“Many of the black-box approaches are not susceptible to reverse engineering, so we cannot track back how this decision was made,” says Georgios Samakovitis, a computer scientist specialising in AML technologies at the University of Greenwich. “And the way that regulation and law is built around analysing suspicious activity, especially when it has to do with criminal uses of money, often requires that we be able to articulate the grounds for a decision. So there is a big gap there.”

“In harnessing these technologies, it is of paramount importance that the bank can explain how they work and why the outcomes are in line with improved effectiveness for the bank and indeed for the customers [it is] protecting,” says Mr Shah, stressing that, at this early stage, AML controls should include both traditional rules-based detection and advanced analytics.

“Ensuring the regulator is comfortable with your approach and framework, as well as the substantive nature of where AI is used, is important to ensure the approach deals with legal, ethical and regulatory challenges,” he adds.

Santander is working with the FCA on observed pilots of centralised transaction monitoring across the industry, as well as on the detection of specific types of suspicious activity across multiple financial institutions.

“Phase one of both [internal and cross-industry] pilots have concluded and we are in discussions regarding feasibility studies for future development. In addition, we are exploring the use of this technology in sanctions screening outcome evaluation,” says Mr Shah.

Tricky predictions

Another potential limitation of AI is the fact that criminals are constantly adapting their methods. Mr Samakovitis says this is one of the key weaknesses of supervised learning: the system is less efficient when dealing with new fraud behaviour that was not present within the data used to develop and train the AI solution. There are more complex AI methods that can perform far better when faced with new behaviour, he explains, but “this is where you get into the black-boxing techniques”. These solutions are likely to feature “many layers of AI sitting one on top of the other and connected very often in convoluted ways,” he says.

According to Mr Samakovitis, developing collective intelligence for AML is critical. “Technology, particularly through recent advances in blockchain, is becoming increasingly able to bring that capability,” he says. New tools, such as a distributed ledger-based system using “smart-contracts” with inbuilt algorithms, are being created to simplify customer due diligence and KYC processes and allow financial institutions to record transactions on the blockchain. Alerts of suspicious activity would be sent to all stakeholders on the network and relevant transactions automatically halted for investigation.

“For blockchain technology to deliver robust performance, it will have to reach a maturity level that will satisfy the industry’s operational readiness and safety requirements,” says Mr Samakovitis.

However, despite the regulatory complications, Nordea’s Mr Meehan is optimistic about what he views as a significant new wave of technological development for AML professionals. “If you look at financial crime as an industry, it's actually quite a new one,” he says. “We're going through that next generation of anti-financial crime capabilities. The first iteration was all about rule-based, compliance-led controls, whereas what we are looking at now is more intelligence-led analytics capabilities.”

Plugging the data gap

One of the major constraints on the effective use of artificial intelligence (AI) to help combat financial crime is the lack of information sharing between organisations and the regulator. Currently any AI or advanced analytics tools used by regulated firms can only analyse information passing through a single organisation, while criminals are making use of the whole financial system to launder money.

“You've got criminality across multiple institutions, and banks will lose that view once it leaves their organisation,” says Alex Meehan, head of financial crime intelligence and analytics at Nordea.

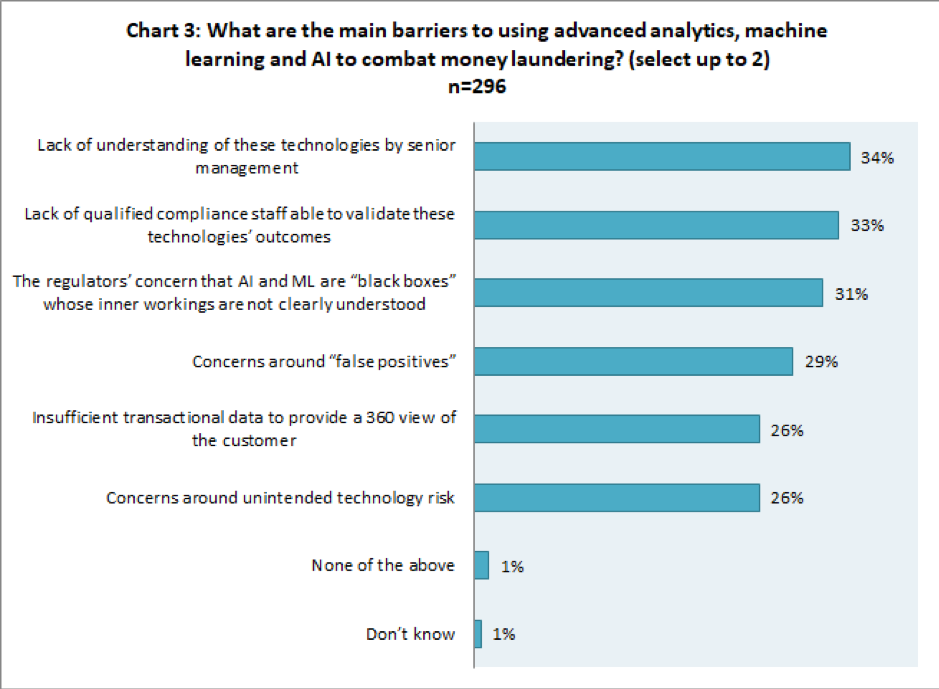

Among survey respondents who are deploying AI and advanced technologies, more than a third (34%) believe increasing information sharing between regulated businesses is key to improving AML efficiency. The Financial Conduct Authority has encouraged trials of data sharing as part of its sandbox initiative, but legal and competitive constraints currently prevent broader collaboration.